Hello, and welcome back to my Tales of Machine Learning series, where I detail my journey researching supervised learning and neural networks (NNs).

One of the early struggles I had in learning ML was trying to understand, both mathematically and visually, the process that NNs use to “learn” from their mistakes. That process is known as backpropagation. Before I get into the story, I need to explain what backpropagation actually is and where it fits in the bigger pipeline of supervised learning.

For context…

I was building a flower image classifier: a NN that takes an image of a flower and predicts what type of flower it is.

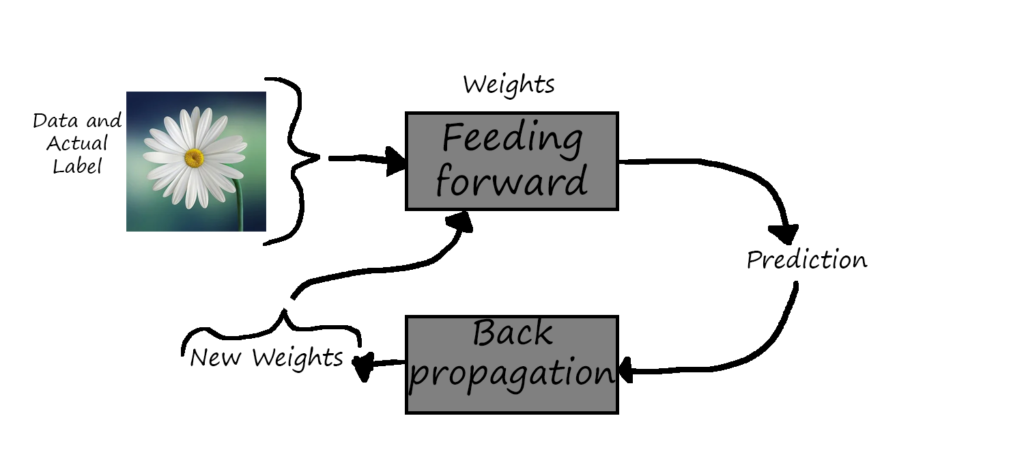

Feeding forward is the algorithm that applies “weights” (numbers that determine the relative “importance” of each input) to the input data. The output is a vector of probabilities, and the highest entry is the most likely category.
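To make feeding forward a little more concrete, here is a minimal sketch in plain Python of a single layer: each class score is a weighted sum of the inputs, and a softmax turns those scores into probabilities. The softmax output is my assumption (it is a common way to get a probability vector), and the three input features, the weights, and the class names are made-up values for illustration, not my actual flower classifier.

```python
import math

def softmax(scores):
    """Turn raw class scores into probabilities that sum to 1."""
    m = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def feed_forward(x, weights, biases):
    """One dense layer: each class score is a weighted sum of the inputs."""
    scores = [
        sum(w * xi for w, xi in zip(row, x)) + b
        for row, b in zip(weights, biases)
    ]
    return softmax(scores)

# Hypothetical toy example: 3 input features, 3 flower classes
classes = ["daisy", "rose", "tulip"]
x = [0.5, 0.1, 0.9]
weights = [
    [0.2, -0.5, 0.1],   # weights for "daisy"
    [1.5, 1.3, 2.1],    # weights for "rose"
    [0.0, 0.25, 0.2],   # weights for "tulip"
]
biases = [0.0, 0.0, 0.0]

probs = feed_forward(x, weights, biases)
prediction = classes[probs.index(max(probs))]  # highest entry wins
print(probs, prediction)
```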

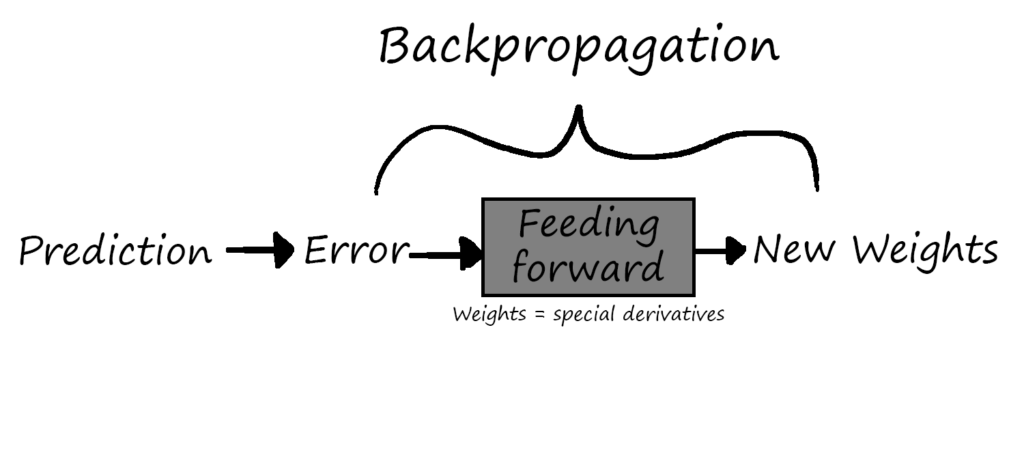

Backpropagation is the algorithm that adjusts these weights so the network makes better predictions in the future. It calculates the error between the prediction and the actual label and corrects the weights accordingly. The smaller the error, the less the weights change.
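Here is a hedged sketch of one backpropagation step for a toy single-layer network, assuming a softmax output trained with cross-entropy loss. I picked that pairing because the gradient with respect to each class score works out to simply the predicted probability minus the target, so the "error" shows up directly in the update. `backprop_step`, `total_change`, and the learning rate of 0.1 are hypothetical names and values for illustration.

```python
import math

def softmax(scores):
    m = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def feed_forward(x, weights, biases):
    scores = [sum(w * xi for w, xi in zip(row, x)) + b
              for row, b in zip(weights, biases)]
    return softmax(scores)

def backprop_step(x, label, weights, biases, learning_rate=0.1):
    """One gradient-descent update for a softmax layer with cross-entropy loss.

    For this loss, the gradient with respect to each class score is
    (predicted probability - target), i.e. the prediction error.
    Returns the updated weights, updated biases, and the error vector.
    """
    probs = feed_forward(x, weights, biases)
    target = [1.0 if i == label else 0.0 for i in range(len(probs))]
    errors = [p - t for p, t in zip(probs, target)]
    # Each weight moves by learning_rate * error * input
    new_weights = [
        [w - learning_rate * err * xi for w, xi in zip(row, x)]
        for row, err in zip(weights, errors)
    ]
    new_biases = [b - learning_rate * err for b, err in zip(biases, errors)]
    return new_weights, new_biases, errors

def total_change(old, new):
    """Sum of absolute weight changes, to compare update sizes."""
    return sum(abs(a - b) for ra, rb in zip(old, new) for a, b in zip(ra, rb))

# Same hypothetical toy layer: class 1 already gets the highest probability
x = [0.5, 0.1, 0.9]
weights = [[0.2, -0.5, 0.1], [1.5, 1.3, 2.1], [0.0, 0.25, 0.2]]
biases = [0.0, 0.0, 0.0]

w_good, _, _ = backprop_step(x, 1, weights, biases)  # small error
w_bad, _, _ = backprop_step(x, 0, weights, biases)   # large error
print(total_change(weights, w_good), total_change(weights, w_bad))
```

Because each weight moves in proportion to its error, the update for the label the network already favors is much smaller than the update for a label it got badly wrong.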

Both algorithms rely heavily on matrix multiplication, which I will not cover in this post.

See the image of the general pipeline of feeding forward and backpropagation below:

The Challenge…

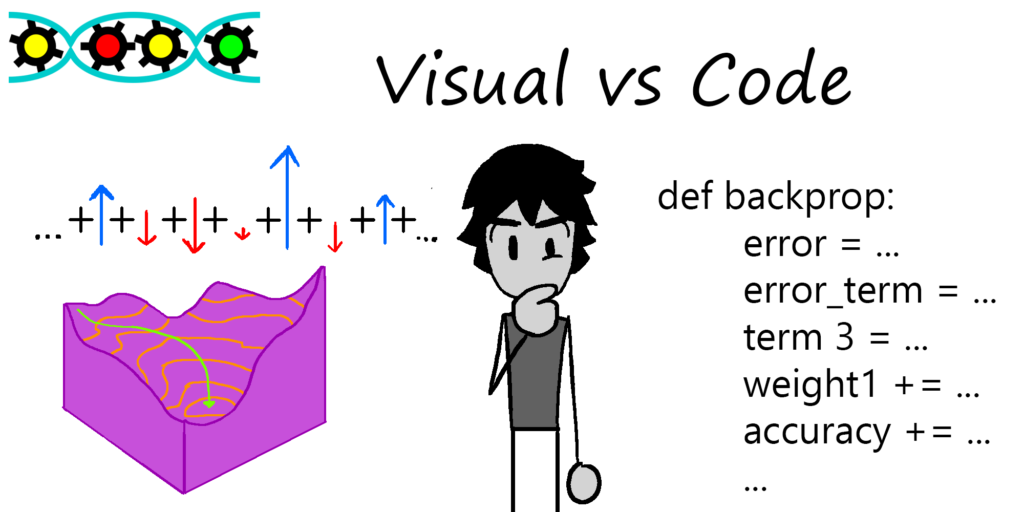

What made backpropagation so difficult to learn was that I learned two extreme interpretations of it.

The first was through 3Blue1Brown’s videos on gradient descent, which is at the heart of how backpropagation trains a network. 3B1B is an excellent instructor who explains why a concept matters before diving into its intricacies. His videos are so valuable that even the Udacity Nanodegree program used them to introduce backpropagation.

His almost purely visual approach was helpful for showing the significance of gradient descent. However, what ended up stumping me was that as he discussed the role of gradient descent for each individual piece of data across an entire training set, the growing number of arrows and visuals became distracting. It became too cluttered for me to keep up.

That is when I turned to Udacity’s more compact, mathematical code implementation of backpropagation.

When the nanodegree gave me the task of finishing prewritten backpropagation code, I faced the opposite problem: there was not enough visual intuition attached to the terms provided. In particular, I struggled to tell the difference between variables like “error” and “error term”. They seemed to serve similar purposes, and I ended up very confused.
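Looking back, the distinction is small but important. The sketch below assumes a single output unit with a sigmoid activation and squared-error loss, a common setup in introductory exercises; the variable names echo the ones I struggled with, but the numbers are made up and this is not the nanodegree's actual code. The "error" is just the gap between the label and the prediction, while the "error term" multiplies that gap by the slope of the activation function, and it is the error term that drives the weight update.

```python
import math

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

# Hypothetical single sigmoid output unit (made-up values)
x = [0.5, 0.1, 0.9]     # inputs
w = [0.4, -0.2, 0.3]    # current weights
target = 1.0            # the true label

h = sum(wi * xi for wi, xi in zip(w, x))  # weighted sum of the inputs
output = sigmoid(h)

# "error": how far the prediction is from the label
error = target - output

# "error term": the error scaled by the slope of the activation at h,
# using sigmoid'(h) = output * (1 - output)
error_term = error * output * (1 - output)

# The weight update uses the error term, not the raw error
learning_rate = 0.5
delta_w = [learning_rate * error_term * xi for xi in x]
w_new = [wi + dwi for wi, dwi in zip(w, delta_w)]
print(error, error_term, w_new)
```

Since the sigmoid's slope is never larger than 0.25, the error term is always smaller in magnitude than the raw error.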

My Solution

Somewhere between these two interpretations of backpropagation, I ended up discovering one of my own:

Instead of thinking of backpropagation as its own unique and unfamiliar algorithm, I think of it as the feeding forward algorithm run backwards through the network, with errors as the inputs and derivatives as the weights. The analogous “prediction” is the new set of weights used in future feeding forward passes. See the image below for my general interpretation:
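As a rough code version of that analogy, here is a sketch of a tiny two-layer network with sigmoid activations and squared-error loss. The layer sizes, weights, and learning rate are arbitrary, and the helper names `matvec` and `transpose` are my own. The backward half reuses the exact weighted-sum operation from the forward half: the errors go in as inputs, travel through the same weights in the opposite direction (transposed), and get scaled by the activation derivatives at each layer.

```python
import math

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def matvec(matrix, vec):
    """Weighted sums: the same operation used when feeding forward."""
    return [sum(m * v for m, v in zip(row, vec)) for row in matrix]

def transpose(matrix):
    return [list(col) for col in zip(*matrix)]

# Hypothetical 3-input, 2-hidden, 1-output network (made-up values)
x = [0.5, 0.1, 0.9]
W1 = [[0.1, -0.2, 0.4],
      [0.3, 0.2, -0.1]]   # shape: hidden x input
W2 = [[0.5, -0.3]]        # shape: output x hidden
target = [1.0]
lr = 0.5

# Feeding forward: inputs -> weighted sums -> activations
hidden = [sigmoid(z) for z in matvec(W1, x)]
output = [sigmoid(z) for z in matvec(W2, hidden)]

# Backpropagation read as a "forward pass" run backwards:
# the errors play the role of the inputs...
output_error = [t - o for t, o in zip(target, output)]
output_term = [e * o * (1 - o) for e, o in zip(output_error, output)]
# ...travel through the same weights (transposed)...
hidden_error = matvec(transpose(W2), output_term)
# ...and are scaled by the activation derivatives
hidden_term = [e * h * (1 - h) for e, h in zip(hidden_error, hidden)]

# The "prediction" of the backward pass: a new set of weights
W2 = [[w + lr * d * h for w, h in zip(row, hidden)]
      for row, d in zip(W2, output_term)]
W1 = [[w + lr * d * xi for w, xi in zip(row, x)]
      for row, d in zip(W1, hidden_term)]
print(W1, W2)
```

The final two assignments produce the updated weights that the next real feeding forward pass would use.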

What to Take Away From This

The Udacity Nanodegree program allowed me to wrangle both the mathematical and visual perspectives of machine learning and fuse them into an interpretation all my own.

Although I did not go into much detail on the math or code in this post, I will discuss the actual math and cover this challenge much more thoroughly once I begin to discuss machine learning in depth. Be sure to stay tuned for updates regarding this course.

Thank you!

Thank you for reading the second post in this series. To read the previous or next post, click on the appropriate link below:

Until next time, keep building and stay creative!
